ChatGPT is dismissing it, but I’m not so sure.

Within return/RMA window: Yes? Why not?

Seriously, do not use LLMs as a source of authority. They are stochastic machines predicting the next character they type; if what they say is true, it’s pure chance.

Use them to draft outlines. Use them to summarize meeting notes (and review the summaries). But do not trust them to give you reliable information. You may as well go to a party, find the person who’s taken the most acid, and ask them for an answer.

I’d say those SMART attributes don’t look great…

First sentence of each paragraph: correct.

Basically all the rest is bunk besides the fact that you can’t count on always getting reliable information. Right answers (especially for something that is technical but non-verifiable), wrong reasons.

There are “stochastic language models” I suppose (e.g., click the middle suggestion from your phone after typing the first word to create a message), but something like ChatGPT or Perplexity or DeepSeek is not that, beyond using tokenization / word2vec-like setups to make human-readable text. These are a lot more like “don’t trust everything you read on Wikipedia” than a randomized acid drop response.

yeah, that’s why I’m here, dude.

So then, if you knew this, why did you bother to ask it first? I’m kinda annoyed and jealous of your AI friend over there. Are you breaking up with me?

Because it’s like a search box you can explain a problem to and get a bunch of words related to it without having to wade through blogspam, 10-year-old Reddit posts, and snippy Stack Overflow replies. You don’t have to post on Discord and wait a day or two hoping someone will maybe come and help. Sure, it is frequently wrong, but it’s often a good first step.

And no I’m not an AI bro at all, I frequently have coworkers dump AI slop in my inbox and ask me to take it seriously and I fucking hate it.

But once you have its output, unless you already know enough to judge whether it’s correct, you have to fall back to doing all those things you used the AI to avoid in order to verify what it told you.

Sure, but you at least have something to work with rather than whatever you know off the top of your head

It is not a search box. It generates words we know are confidently wrong quite often.

“Asking” gpt is like asking a magic 8 ball; it’s fun, but it has zero meaning.

Well, that’s just blatantly false. They’re extremely useful for the initial stage of research when you’re not really sure where to begin or what to even look for, when you don’t know what you should read or even what the correct terminology is surrounding your problem. They’re “language models”, which means they’re halfway decent at working with language.

They’re noisy, lying plagiarism machines that have created a whole Pandora’s box full of problems and are being shoved in many places where they don’t belong. That doesn’t make them useless in all circumstances.

Not false, and shame on you for suggesting it.

I not only disagree, but sincerely hope you aren’t encouraging anyone to look up information using an LLM.

LLMs are toys right now.

The part I’m calling out as untrue is the “magic 8 ball” comment, because it directly contradicts my own personal lived experience. Yes it’s a lying, noisy, plagiarism machine, but its accuracy for certain kinds of questions is better than a coin flip and the wrong answers can be useful as well.

Some recent examples

- I had it write an Excel formula that I didn’t know how to write, but could sanity check and test.

- Worked through some simple, testable questions about setting up project references in a TypeScript project.

- I wanted to implement URL previews in a web project but I didn’t know what the standard for that is called. Every web search I could think of related to “url previews” is full of SEO garbage I don’t care about, but ChatGPT immediately gave me the correct answer (Open Graph meta tags), easily verified by searching for that and reading the public documentation.

- Naming things is a famously hard problem in programming and LLMs are pretty good at “what’s another way to say” and “what’s it called when” type questions.

Just because you don’t have the problems that LLMs solve doesn’t mean that nobody else does. And also, dude, don’t scold people on the internet. The fediverse has a reputation and it’s not entirely a good one.

I doubted ChatGPT’s input and I came here looking for help. What are you on about?

Dude, people here are such fucking cunts, you didn’t do anything wrong, ignore these 2 troglodytes who think they are semi-intelligent. I’ve worked in IT nearly my whole life. I’d return it if you can.

Defensive… If someone asks you for advice, and says they have doubts about the answer they received from a Magic 8-Ball, how would you feel?

Very Doubtful

You don’t count, you would simply feel like a loaf.

Acid freaks are probably more reliable than ChatGPT

You’ll certainly gain some valuable insight, even if it has nothing to do with your question. Which is more than I can say for LLMs.

I don’t understand the willingness to forgive error … Would you go to a person if you knew for a fact that 1 of 5 things they say is wrong?

If the person would answer almost instantly, 24/7, without being annoyed: Yes. Checking important information is easier once you know what exactly to type.

I call them regurgitation machines prone to hallucinations.

That is a perfect description.

Just a reminder that LLMs can only truncate text, they are incapable of summarization.

Inside the nominal return period for a device, absolutely.

If it’s a warranty repair I’ll wait for an actual trend, maybe run a burn-in on it and force its hand.

Yes, definitely.

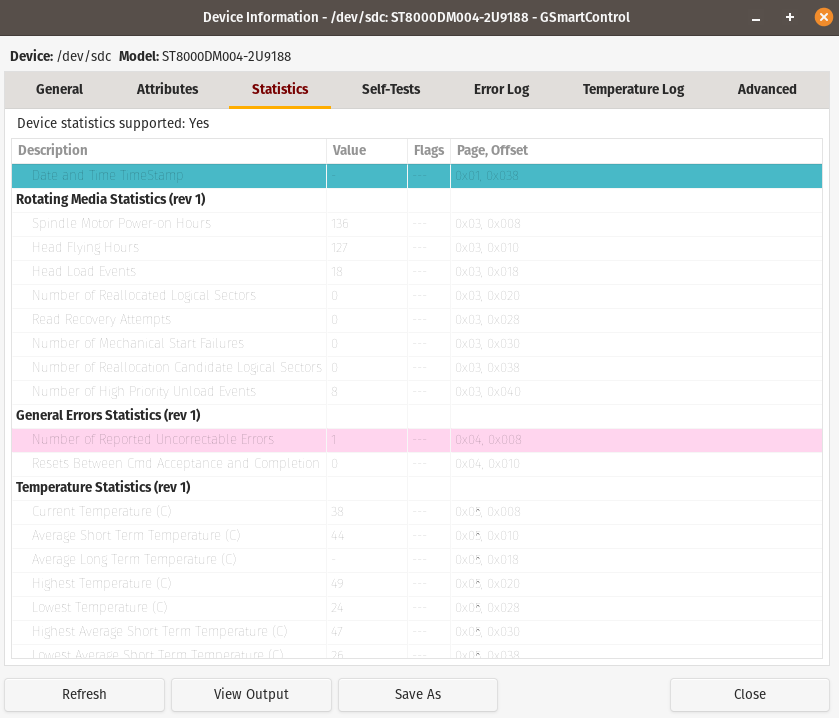

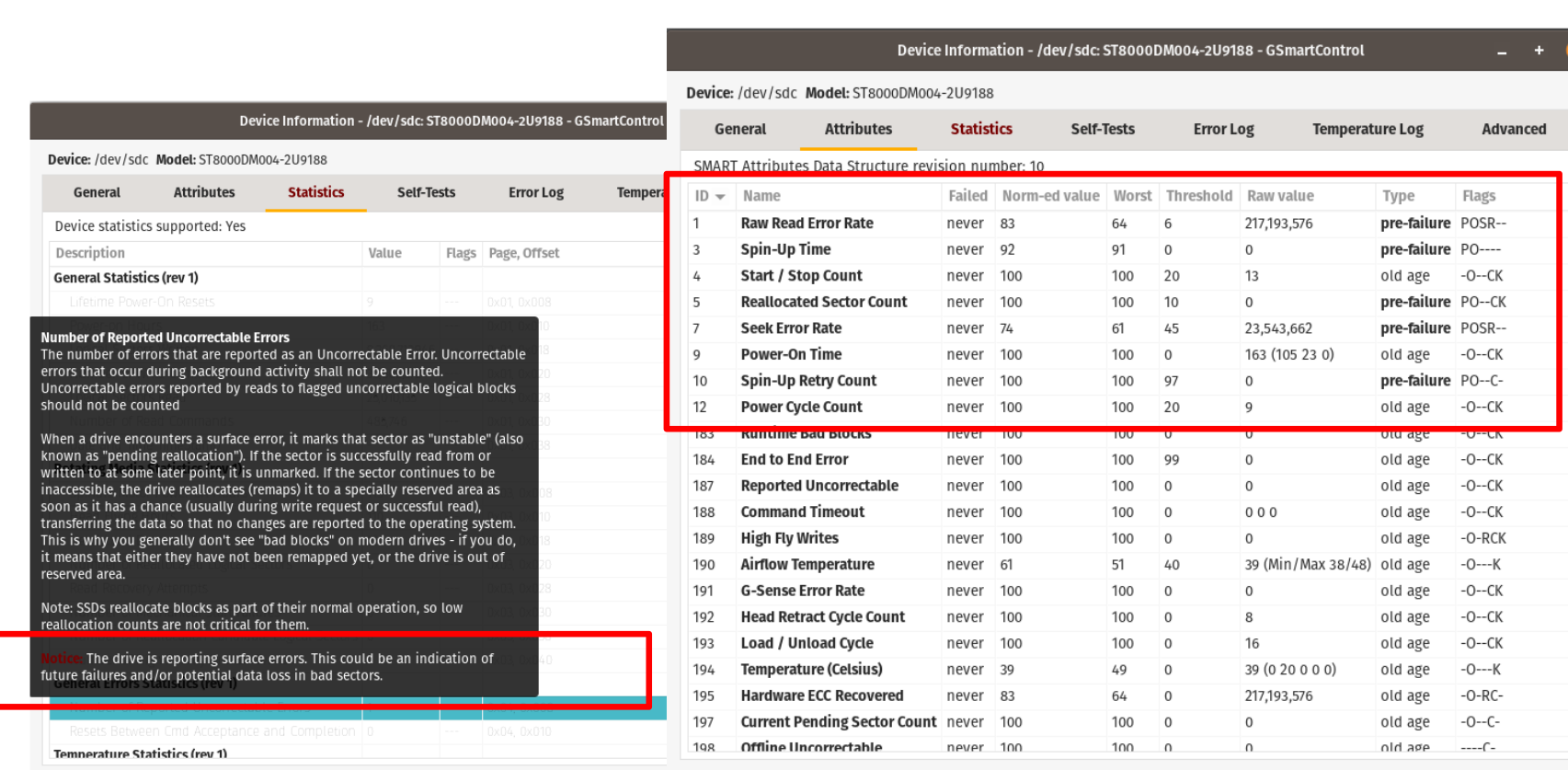

Seagate’s error rate values (IDs 1, 7, and 195) are busted. Not in that they’re wrong or anything, but that they’re misleading to people who don’t know exactly how to read them.

ALL of those are actually reporting zero errors. This calculator can confirm it for you: https://s.i.wtf/
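For anyone who wants to check the arithmetic by hand instead of using the calculator, here is a minimal Python sketch. It assumes the commonly described layout for these Seagate attributes, where the 48-bit raw value packs an error count into the upper 16 bits and an operation count into the lower 32 bits. That layout and the function name `decode_seagate_raw` are assumptions for illustration, not something confirmed in this thread, and the sample number is made up rather than read from the screenshots.

```python
def decode_seagate_raw(raw: int) -> tuple[int, int]:
    """Split a 48-bit Seagate raw value into (error_count, operation_count)."""
    raw &= (1 << 48) - 1            # SMART raw values are 48 bits wide
    errors = raw >> 32              # assumed: upper 16 bits hold the error count
    operations = raw & 0xFFFFFFFF   # assumed: lower 32 bits hold the operation count
    return errors, operations


# Hypothetical raw value: large and scary-looking, but below 2**32,
# so the error part decodes to zero.
print(decode_seagate_raw(226_534_712))
```

Under that assumption, any raw value below 4294967296 (2^32) means zero errors, however big the number looks.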

Edit: As for the first image, I don’t see any attributes in #2 which match with it. It’s possible there is an issue with the drive, but it could also be something to do with the cable. I can’t tell with any confidence.

Edit Edit: I compared the values to my own Seagates and skimmed a manual. While I can’t say for sure what the error reported is in relation to, it is absolutely not to be ignored. If your return period ends very soon, stop here and just return it. If you have plenty of time, you may optionally investigate further to build a stronger case that the drive is a dud. My method is to run a test for bad blocks using this alternative method (https://wiki.archlinux.org/title/Badblocks#Alternatives), but the SMART self-test listed would probably also spit errors if there are issues. If either fails, you have absolute proof the drive is a dud to send alongside the refund request.

No testing/proof should be required to receive your refund, but if you can prove it’s a dud, you may just stop it from getting repacked and resold.
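For the SMART self-test route mentioned above, a rough sketch of what you would run, wrapped in Python so it is easy to script. `smartctl -t long` and `smartctl -l selftest` are standard smartmontools options; `/dev/sdX` is a placeholder for your actual device, and the helper names are made up for this example. The long test can take many hours on a big drive, so read the log only after it has finished.

```python
import subprocess


def selftest_commands(device: str) -> list[list[str]]:
    """Return the smartctl invocations for a long self-test and its log."""
    return [
        ["smartctl", "-t", "long", device],      # start the extended self-test
        ["smartctl", "-l", "selftest", device],  # read the self-test log afterwards
    ]


def run(cmd: list[str]) -> str:
    """Run one command and return its stdout (smartctl usually needs root)."""
    return subprocess.run(cmd, capture_output=True, text=True, check=False).stdout


if __name__ == "__main__":
    # Only prints the commands; call run(cmd) yourself once the device is right.
    for cmd in selftest_commands("/dev/sdX"):
        print(" ".join(cmd))
```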

Already packed. I am also having trouble copying files due to issues with the file name (6 characters, nothing fancy). And it makes a ticking sound once in a while. I’m done with it.

SMART data can be hard to read. But it doesn’t look like any of the normalized values are approaching the failure thresholds. It doesn’t show any bad sectors. But it does show read errors.

I would check the cable first, make sure it’s securely connected. You said it clicks sometimes, but that could be normal. Check the kernel log/dmesg for errors. Keep an eye on the SMART values to see if they’re trending towards the failure thresholds.
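If you want to track the trend rather than eyeball it, here is a small sketch that parses the attribute table printed by `smartctl -A` and reports how far each normalized value sits above its threshold. The sample table is invented for illustration (not the poster's drive), and the column positions assume the usual `ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH ...` layout.

```python
def parse_attributes(text: str) -> list[dict]:
    """Parse rows of a `smartctl -A` attribute table into dicts."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # Attribute rows start with a numeric ID and have at least 10 columns.
        if len(parts) >= 10 and parts[0].isdigit():
            rows.append({
                "id": int(parts[0]),
                "name": parts[1],
                "value": int(parts[3]),    # normalized value
                "thresh": int(parts[5]),   # failure threshold
                "raw": " ".join(parts[9:]),
            })
    return rows


def margins(rows: list[dict]) -> dict[str, int]:
    """Map attribute name -> normalized value minus threshold."""
    return {r["name"]: r["value"] - r["thresh"] for r in rows}


# Invented sample output, for illustration only.
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  1 Raw_Read_Error_Rate     0x000f   080   064   044    Pre-fail  Always       -       104857600
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
"""

print(margins(parse_attributes(SAMPLE)))
```

Save the margins once a day or so; a margin that keeps shrinking is the trend to worry about.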

Pro Tip: Never quote ChatGPT. Use it to find the real source of info and then quote that.

Never use ChatGPT anyway. There are better (for privacy) alternatives.

Phind.com is a great alternative that provides sources.

I get annoyed that many of them don’t consistently include sources. When you follow up and ask for them you generally get them, but I’m going to look that one over. I like when the answer looks like a Wikipedia article with little numbered references.

I was quite impressed by how it looks and by the free option! However, seeing Google Tag Manager and TikTok analytics domains, I’m already out!