I wonder if my system is good or bad. My server needs 0.1kWh.

45 to 55 watt.

But I make use of it for backup and firewall. No cloud shit.

Between 50W (idle) and 140W (max load). Most of the time it is about 60W.

So about 1.5kWh per day, or 45kWh per month. I pay 0,22€ per kWh (France, 100% renewable energy) so about 9-10€ per month.
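The arithmetic in that estimate can be sketched in a few lines. This is only an illustration: the function name `monthly_cost` and the 30-day month are assumptions, while the 60 W average and the 0.22 € per kWh price come from the comment above.

```python
def monthly_cost(avg_watts, price_per_kwh, days=30):
    """Return (kWh per day, kWh per month, cost per month) for a steady average draw."""
    kwh_per_day = avg_watts * 24 / 1000      # W * h = Wh, divided by 1000 gives kWh
    kwh_per_month = kwh_per_day * days       # assumes a 30-day month by default
    return kwh_per_day, kwh_per_month, kwh_per_month * price_per_kwh


per_day, per_month, cost = monthly_cost(60, 0.22)
print(f"{per_day:.2f} kWh/day, {per_month:.1f} kWh/month, {cost:.2f} EUR/month")
```

With a 60 W average this lands slightly under the rounded figures quoted above, which is expected since 1.5 kWh per day is itself rounded up from 1.44.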

Are you including nuclear power in renewable or is that a particular provider who claims net 100% renewable?

Net 100% renewable, no nuclear. I can even choose where it comes from (in my case, a wind farm in northwest France). Of course, not all of my electricity comes from there at all times, but I have the guarantee that renewable energy bonds equivalent to my consumption will be bought from there, so it is basically the same.

Thanks. I buy Vattenfall but make net 2/3rds of my own power via rooftop solar.

Mine runs at about 120 watts per hour.

Please. The watt is an SI unit of power, equivalent to one joule per second. The watt-hour is a non-SI unit of energy (1 Wh = 3600 J). Learn the difference and use them correctly.

My server uses about 6-7 kWh a day, but it’s a dual CPU Xeon running quite a few dockers. Probably the thing that keeps it busiest is being a file server for our family and a Plex server for my extended family (so a lot of the CPU usage is likely transcodes).

I came here to say my tiny Raspberry Pi 4 consumes ~10 watts. But then, after noticing the home server setups of some people and the associated power consumption, I feel like a child in a crowd of adults 😀

we’re in the same boat, but it does the job and stays under 45°C even under load, so I’m not complaining

I have an old desktop downclocked that pulls ~100W that I’m using as a file server, but I’m working on moving most of my services over to an Intel NUC that pulls ~15W. Nothing wrong with being power efficient.

Quite the opposite. Look at what they need to get a fraction of what you do.

Or use the old quote, “they’re compensating for small pp”

I’m using an old laptop with the lid closed. Uses 10w.

All in, including my router, switches, modem, laptop, and NAS, I’m using 50watts +/- 5.

It does everything I need, and I feel like that’s pretty efficient.

Mate, kWh is a measure of electricity volume, like gallons is to liquid. Also, 100 watt hours would be a much more sensical way to say the same thing. What you’ve said in the title is like saying your server uses 1 gallon of water. It’s meaningless without a unit of time. Watts is a measure of current flow (pun intended), similar to a measurement like gallons per minute.

For example, if your server uses 100 watts for an hour it has used 100 watt hours of electricity. If your server uses 100 watts for 100 hours it has used 10000 watt hours of electricity, aka 10kWh.
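The relationship described here (energy = power × time) can be checked with a tiny sketch. The helper name `energy_wh` is made up for illustration; the numbers are the ones from the example above.

```python
def energy_wh(power_w, hours):
    """Energy in watt-hours for a constant power draw over a number of hours."""
    return power_w * hours


one_hour = energy_wh(100, 1)        # 100 W for 1 hour
hundred_hours = energy_wh(100, 100)  # 100 W for 100 hours

print(one_hour, "Wh")
print(hundred_hours, "Wh =", hundred_hours / 1000, "kWh")
```

The watts figure is the rate, and the watt-hours figure only appears once a duration is attached to it.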

My NAS uses about 60 watts at idle, and near 100w when it’s working on something. I use an old laptop for a plex server, it probably uses like 50 watts at idle and like 150 or 200 when streaming a 4k movie, I haven’t checked tbh. I did just acquire a BEEFY network switch that’s going to use 120 watts 24/7 though, so that’ll hurt the pocket book for sure. Soon all of my servers should be in the same place, with that network switch, so I’ll know exactly how much power it’s using.

With everything on, 100W but I don’t have my NAS on all the time and in that case I pull only 13W since my server is a laptop

kWh is a unit of energy, not power

I was really confused by that and that the decided units weren’t just in W (0.1 kW is pretty weird even)

Wh shouldn’t even exist tbh, we should use Joules, less confusing

Watt hours makes sense to me. A watt hour is just a watt draw that runs for an hour, it’s right in the name.

Maybe you’ve just whooooshed me or something, I’ve never looked into Joules or why they’re better/worse.

Joules (J) are the official unit of energy. 1W=1J/s. That means 1Wh=3600J or that 1J is kinda like “1 Watt second”. You’re right that Wh is easier since everything is rated in Watts and it would be insane to measure energy consumption by seconds. Imagine getting your electric bill and it says you’ve used 3,157,200,000J.

3,157,200,000J

Or just 3.1572GJ.
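For anyone who wants to sanity-check those numbers, here is a rough conversion sketch. The function names are invented for this example; the only facts used are 1 Wh = 3600 J and the bill figure quoted above.

```python
JOULES_PER_WH = 3600  # 1 W = 1 J/s, and an hour has 3600 seconds


def wh_to_joules(wh):
    """Convert watt-hours to joules."""
    return wh * JOULES_PER_WH


def joules_to_kwh(joules):
    """Convert joules to kilowatt-hours."""
    return joules / JOULES_PER_WH / 1000


bill_joules = 3_157_200_000
print(joules_to_kwh(bill_joules), "kWh")   # the same bill expressed in kWh
print(bill_joules / 1e9, "GJ")             # the same bill expressed in gigajoules
print(wh_to_joules(100), "J in 0.1 kWh")
```

So the scary-looking joule figure is the same as an 877 kWh bill.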

Which apparently is how this Canadian natural gas company bills its customers: https://www.fortisbc.com/about-us/facilities-operations-and-energy-information/how-gas-is-measured

I guess it wouldn’t make sense to measure energy used by gas-powered appliances in Wh since they’re not rated in Watts. Still, measuring volume and then converting to energy seems unnecessarily complicated.

Thanks for the explainer, that makes a lot of sense.

At least in the US, the electric company charges in kWh, computer parts are advertised in terms of watts, and batteries tend to be in amp hours, which is easy to convert to watt hours.

Joules just overcomplicates things.
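The amp-hour conversion mentioned above needs the battery's nominal voltage, since Wh = Ah × V. A minimal sketch follows; the function name and the example battery values (10 Ah at 12 V, 5 Ah at 3.7 V) are hypothetical and not taken from anyone's hardware in this thread.

```python
def amp_hours_to_watt_hours(amp_hours, nominal_volts):
    """Convert a battery capacity in Ah to Wh using its nominal voltage."""
    return amp_hours * nominal_volts


# Hypothetical batteries, for illustration only.
print(amp_hours_to_watt_hours(10, 12), "Wh")              # 10 Ah at 12 V
print(round(amp_hours_to_watt_hours(5, 3.7), 1), "Wh")    # 5 Ah at 3.7 V
```

Real batteries sag in voltage as they discharge, so this is an estimate based on the nominal rating.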

deleted by creator

Wow, the US education system must be improved.

I pay my electric bill by the kWh too, and I don’t live in the US. When it comes to household and EV energy consumption, kWh is the unit of choice.

1J is 3600Wh.

No, if you’re going to lecture people on this, at least be right about facts. 1W is 1J/s. So multiply by an hour and you get 1Wh = 3600J

That’s literally the same thing,

It’s not literally the same thing. The two units are linearly proportional to each other, but they’re not the same. If they were the same, then this discussion would be rather silly.

but the name is less confusing because people tend to confuse W and Wh

Finally, something I can agree with. But that’s only because physics is so undervalued in most educational systems.

Do you regularly divide/multiply by 3600? That’s not something I typically do in my head, and there’s no reason to do it when everything is denominated in watts. What exactly is the benefit?

I did a physics degree and am comfortable with Joules, but in the context of electricity bills, kWh makes more sense.

All appliances are advertised in terms of their Watt power draw, so estimating their daily impact on my bill is as simple as multiplying their kW draw by the number of hours in a day I expect to run the thing (multiplied by the cost per kWh by the utility company of course).

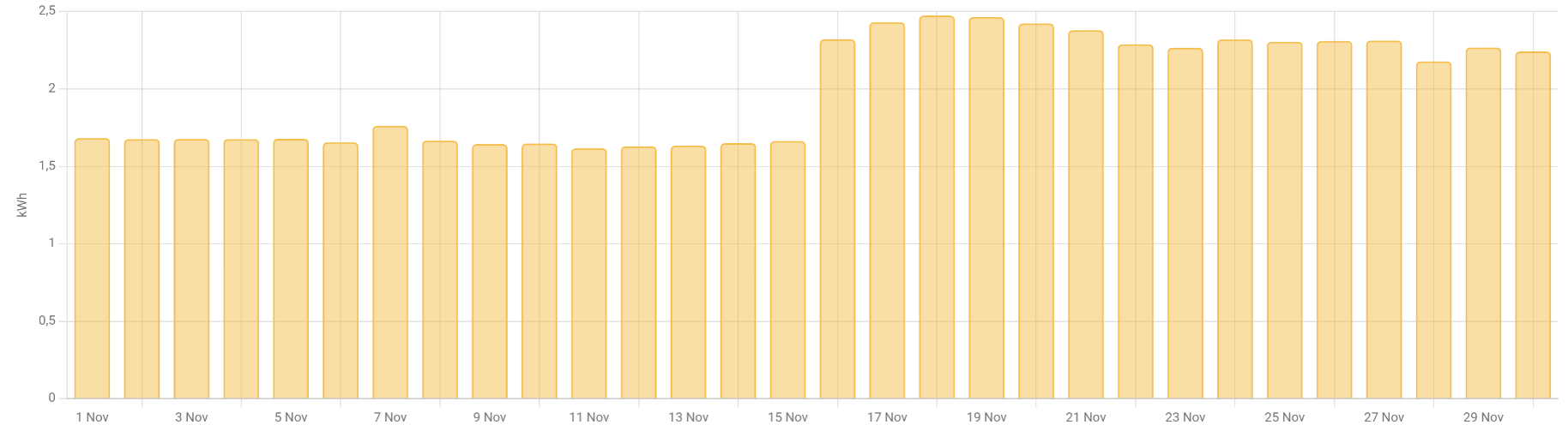

Wasn’t it stated for the usage during November? 60kWh for November. Seems logical to me.

Edit: forget it, he’s saying his server needs 0.1kWh which is bonkers ofc

Only one person here has posted their usage for November. The OP has not talked about November or any timeframe.

Yeah, mixed up posts, thought one depended on another because it was under it. Again forget my post :-)

Idles at around 24W. It’s amazing that your server only needs .1kWh once and keeps on working. You should get some physicists to take a look at it, you might just have found perpetual motion.

.1kWh is 100Wh

This is a factual but irrelevant statement

I ate sushi today.

Good point. Now it does make sense. I know the secret to the perpetual motion machine now.

The PC I’m using as a little NAS usually draws around 75 watt. My jellyfin and general home server draws about 50 watt while idle but can jump up to 150 watt. Most of the components are very old. I know I could get the power usage down significantly by using newer components, but not sure if the electricity use outweighs the cost of sending them to the landfill and creating demand for more newer components to be manufactured.

Pulling around 200W on average.

- 100W for the server. Xeon E3-1231v3 with 8 spinning disks + HBA, couple of sata SSD’s

- ~80W for the unifi PoE 48 Pro switch. Most of this is PoE power for half a dozen cameras, downstream switches and AP’s, and a couple of raspberry pi’s

- ~20W for protectli vault running Opnsense

- Total usage measured via Eaton UPS

- Subsidised during the day with solar power (Enphase)

- Tracked in home assistant

For the whole month of November. 60kWh. This is for all my servers and network equipment. On average, it draws around 90 watt.

How are you measuring this? Looks very neat.

Shelly plug, integrated into Home Assistant.

Looks like home assistant

You might have your units confused.

0.1kWh over how much time? Per day? Per hour? Per week?

Watt-hours refer to the total energy used to do something, from a starting point to an ending point. It makes no sense to say that a device needs a certain amount of Wh, unless you’re talking about something like charging a battery to full.

Power being used by a device, (like a computer) is just watts.

Think of the difference between speed and distance. Watts is how fast energy is being used, watt-hours is how much has been used, or will be used.

If you have a 500 watt PC, for example, it uses 500Wh, per hour. Or 12kWh in a day.

I forgive 'em cuz watt hours are a disgusting unit in general

| idea | what | unit |
| --- | --- | --- |
| speed | change in position over time | meters per second, m/s |
| acceleration | change in speed over time | meters per second, per second, m/s/s = m/s² |
| force | acceleration applied to each unit of mass | kg * m/s² |
| work | acceleration applied along a distance, which transfers energy | kg * m/s² * m = kg * m²/s² |
| power | work over time | kg * m² / s³ |
| energy expenditure | power level during units of time | (kg * m² / s³) * s = kg * m²/s² |

Work over time, × time, is just work! kWh are just joules (J) with extra steps! Screw kWh, I will die on this hill!!! Raaah

Could be worse, could be BTU. And some people still use tons (of heating/cooling).

Power over time could be interpreted as power/time. Power x time isn’t power, it’s energy (=== work). But otherwise I’m with you. Joules or gtfo.

Whoops, typo! Fixed c:

If you have a 500 watt PC, for example, it uses 500Wh, per hour. Or 12kWh in a day.

A maximum of 500 watts. Fortunately your PC doesn’t actually max out your PSU or your system would crash.

kWh is the stupidest unit ever. 1 kWh = 1000 J/s × 60 × 60 s = 3.6 × 10^6 J, so 0.1 kWh = 360 kJ

0.1kWh per hour? Day? Month?

What’s in your system?

Computer with GPU and 50TB drives. I will measure the computer on its own in the next couple of days to see where the power consumption comes from.

You are misunderstanding the confusion, Kwh is an absolute measurement of an amount of power, not a rate of power usage. It’s like being asked how fast your car can go and answering it can go 500 miles. 500 miles per hour? Per day? Per tank? It doesn’t make sense as an answer.

Does your computer use 100 watt hours per hour? Translating to an average of 100 watts power usage? Or 100 watt hours per day maybe meaning an average power use of about 4 watts? One of those is certainly more likely but both are possible depending on your application and load.
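To make the ambiguity concrete, here is a small sketch of what average power a 0.1 kWh reading would imply over different time windows. The function name `average_watts` and the choice of windows (including a 30-day month) are assumptions for illustration.

```python
def average_watts(energy_kwh, hours):
    """Average power in watts implied by an energy total over a time window."""
    return energy_kwh * 1000 / hours


# Candidate time windows in hours; the month is assumed to be 30 days.
windows = {"hour": 1, "day": 24, "week": 24 * 7, "30-day month": 24 * 30}

for name, hours in windows.items():
    print(f"0.1 kWh per {name}: {average_watts(0.1, hours):.2f} W")
```

The same energy figure maps to anything from a typical small server down to a fraction of a watt, which is why the reading is meaningless without the time window.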

Yeah but tbh it’s understandable that OP got confused. I think he just means 100W

He might, but he also might mean that he has a power meter that displays kWh since the last reset. He could have plugged it in, then checked it again after an arbitrary time period once everything was set up, and it was either showing the lowest non-zero value it can display or a total from several hours.

You’re adding to the confusion.

kWh (as in kW*h) and not kW/h is for measurement of energy.

Watt is for measurement of power.

Lol thank you, I knew that, I don’t know why I wrote it that way. In my defense, it was like 4 in the morning.

They said kilowatt hours per hour, not kilowatts per hour.

kWh/h = kW

The h can be cancelled, resulting in kW. They’re technically right, but kWh/h shouldn’t ever be used haha.

Which GPU? How many drives?

Put a Kill A Watt meter on it and see what it says for consumption.

17W for an N100 system with 4 HDD’s

That’s pretty low with 4 HDD’s. One of my servers uses 30 watts. Half of that is from the 2 HDD’s in it.

@meldrik @qaz I’ve got a bunch of older, smaller drives, and as they fail I’m slowly transitioning to much more efficient (and larger) HGST helium drives. I don’t have measurements, but anecdotally a dual-drive USB dock with crappy 1.5A power adapter (so 18W) couldn’t handle spinning up two older drives but could handle two HGST drives.

Which HDDs? That’s really good.

Seagate Ironwolf “ST4000VN006”

I do have some issues with read speeds but that’s probably networking related or due to using RAID5.